This post is part of a series of posts showing how you might move an existing VDI deployment to Azure Virtual Desktop on Azure Stack HCI.

This particular post walks through the automation that does the heavy lifting of moving personal VMs from an RDS virtualization host to Azure Stack HCI as well as registering the VMs with the AVD control plane.

Now for the heavy lifting…

Not much to do… just find the RDS VMs in the infrastructure, shut them down, move them to the HCI cluster, start them up, install agents / register them with AVD, and remove them from the RDS deployment – easy peasy.

I showed in an earlier post how to collect the RDS info (including VM names and user assignments); I’ll show it here again.

RDS Data Collection

Collecting the necessary details can be done programmatically if you know a little PowerShell and the name of your RDS Connection Broker host – here is some sample code that I used to find the details of my deployment:

$RDSBroker = "RDSInfra.gbbgov.us"

$Collection = Get-RDRemoteDesktop -ConnectionBroker $RDSBroker

# get the User / VM mapping

$Details = Get-RDPersonalVirtualDesktopAssignment -CollectionName $Collection.CollectionName -ConnectionBroker $RDSBroker

Write "VMs to be migrated (with assigned users)"

$details

# find the Hyper-V hosts used for personal VMs

$Servers = Get-RDServer -Role "RDS-Virtualization" -ConnectionBroker $RDSBroker

What this returned in $Details was a list of the personal RDS VMs in my environment and the RDS users assigned to them – exactly what I want to migrate!

(Note that this screenshot was taken AFTER I did the migration I’m showing in these blog posts – ignore the VM / user assignments… this is not what I actually migrated!)

I’ll use the UserIDs later, once the VMs are connected to AVD, to assign them back to those same users, but for now I need just the VM name and the name of the Hyper-V host. Truth be told, I only have a single “RDS-Virtualization” server in my infrastructure, so I really don’t need that collection of $Servers.

I didn’t bother to loop through all the (one!) servers I have… I cheated and just used the first server from the collection:

$Server = $Servers[0]

(…you may need to update my sample code for your migration!)

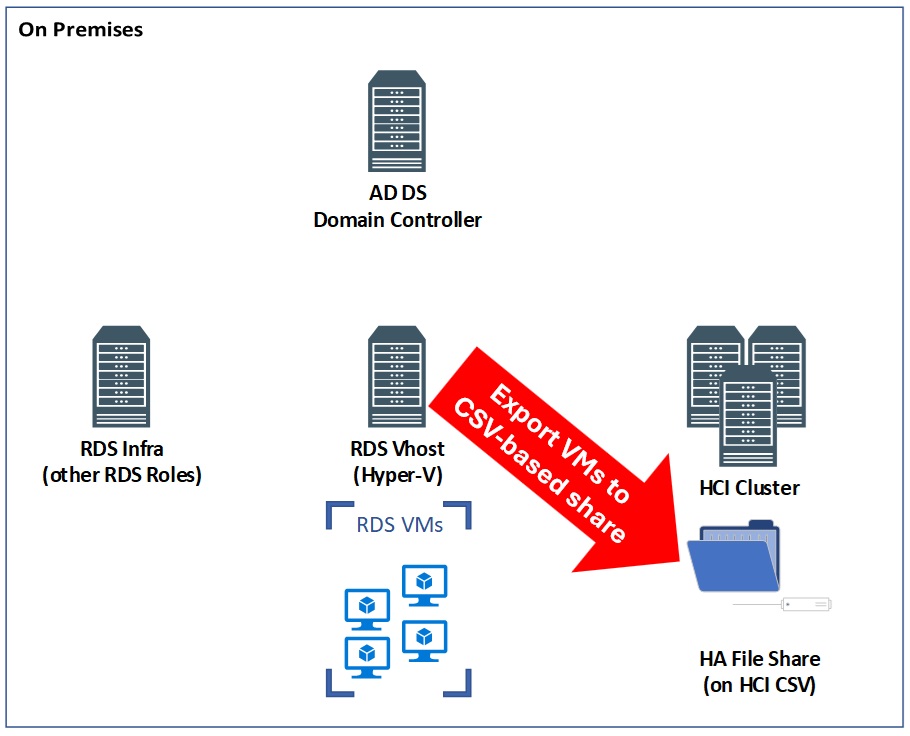
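Since I only had one RDS-Virtualization host, I took the $Servers[0] shortcut. If you have several hosts, one option is to ask each host for the VM before touching it. The helper below is a hypothetical sketch (Find-VMHostName is an illustrative name, not part of any module); it relies only on Get-VM's -Name and -ComputerName parameters and assumes your account can query every host:

```powershell
# Hypothetical helper (not part of the original migration script): return the
# first host in $HostNames that owns a VM called $VMName, or $null if none do.
function Find-VMHostName {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string]   $VMName,
        [Parameter(Mandatory)] [string[]] $HostNames
    )
    foreach ($HostName in $HostNames) {
        # Get-VM writes a non-terminating error when the VM is not on this host,
        # so silence it and try the next candidate.
        $Found = Get-VM -Name $VMName -ComputerName $HostName -ErrorAction SilentlyContinue
        if ($Found) { return $HostName }
    }
    return $null
}
```

Inside the big loop you could then call `$HostName = Find-VMHostName -VMName $Item.VirtualDesktopName -HostNames $Servers.Server` and use $HostName wherever the script uses $Server.Server.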

Anyhow, we need to shut down these personal RDS VMs on that $Server and move them from the RDS Virtualization host to the HCI cluster, as depicted below:

VM Shutdown / Export / Import

Before we get too far, let me say that the two PowerShell files I used for my migration can be found here – the rest of this post is really walking through the larger of the two.
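The loop below relies on several variables being set beforehand. Some ($HCI_VM_Share, $HCI_Node, $HCI_VM_Path) are shown as we go, while others ($WorkDIR, $AVDHostPool, $AZResourceGroup, $AZSubscriptionID) are used but never shown in this post. A small pre-flight check can catch a blank one before the first VM is shut down. This is a hypothetical sketch: Get-MissingSetting and the $Settings hashtable are illustrative names, and the empty values are placeholders for your own environment:

```powershell
# Hypothetical pre-flight check: return the names of any settings that are
# missing or blank, so the run stops before any VM has been shut down.
function Get-MissingSetting {
    param([Parameter(Mandatory)] [hashtable] $Settings)
    $Settings.Keys |
        Where-Object { [string]::IsNullOrWhiteSpace([string]$Settings[$_]) } |
        Sort-Object
}

# Placeholder values: replace with your own share, node, path, and Azure details.
$Settings = @{
    HCI_VM_Share     = '\\fslogix\VMs'
    HCI_Node         = 'HCI.gbbgov.us'
    HCI_VM_Path      = 'C:\ClusterStorage\Volume1\shares\VMs'
    WorkDIR          = ''   # agent installers to copy to each VM (not shown in the post)
    AVDHostPool      = ''   # AVD host pool name
    AZResourceGroup  = ''   # resource group that contains the host pool
    AZSubscriptionID = ''   # Azure subscription ID
}

$Missing = @(Get-MissingSetting -Settings $Settings)
if ($Missing.Count -gt 0) {
    Write-Warning ("Missing settings: " + ($Missing -join ', '))
}
```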

To execute the migration between hosts, I’ll simply loop through the $Details collected earlier:

foreach ($Item in $Details)

{

$VM = get-VM -Name $Item.VirtualDesktopName -Computername $Server.Server

#$VM = get-VM -Name "Per-10" -Computername $Server.Server

$VMName = $VM.name

$ADComputer = get-ADComputer $VM.name

if($VM.State -eq "Saved")

{

Start-VM -Name $VMName -Computername $Server.Server

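Start-Sleep -seconds 30 #let VM wake up

# re-read the VM so the "Running" check below sees its current state

$VM = Get-VM -Name $VMName -Computername $Server.Server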

}

if($VM.State -eq "Running")

{

Stop-VM -Name $VMName -Computername $Server.Server -Force

}

The $Details output had just the computer name, not the full DNS name, so I looked up the AD computer object to get that (to reduce name resolution issues!). I also started any VMs that were “Saved” so they could then be shut down: I’m moving my VMs between processor manufacturers (AMD and Intel), and moving a “Saved” VM just will not work right! Once the VM is shut down, we can move on to exporting it.
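If you'd rather not rely on a fixed sleep and a single state check, another option is to poll until the VM actually reports the state you want before exporting it. This Wait-VMState helper is a hypothetical sketch, not part of the author's scripts; it re-queries Get-VM on every pass because a VM object captured earlier may not reflect later state changes:

```powershell
# Hypothetical helper: poll a VM until it reaches $State (for example 'Off'
# before Export-VM). Returns $true on success, $false if $TimeoutSeconds passes.
function Wait-VMState {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $VMName,
        [Parameter(Mandatory)] [string] $ComputerName,
        [string] $State = 'Off',
        [int]    $TimeoutSeconds = 300,
        [int]    $PollSeconds = 5
    )
    $Deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while ($true) {
        # Ask Hyper-V again each time instead of trusting an older VM object.
        $Current = (Get-VM -Name $VMName -ComputerName $ComputerName).State
        if ("$Current" -eq $State) { return $true }
        if ((Get-Date) -ge $Deadline) { return $false }
        Start-Sleep -Seconds $PollSeconds
    }
}
```

For example, placing `if (-not (Wait-VMState -VMName $VMName -ComputerName $Server.Server)) { Write-Warning "$VMName did not shut down"; continue }` right after Stop-VM would skip a VM that never reached Off rather than exporting it mid-shutdown.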

There are a few variables I set before entering the loop – I’ll share those as we go too:

$HCI_VM_Share = "\\fslogix\VMs"

This is an SMB share I configured on the HCI cluster as “HA” (so I could export the VMs to it from the RDS virtualization host) and homed on a Cluster Shared Volume (CSV) – perfect for hosting VMs once they are registered with the new cluster.

I set the name of the HCI Node I will be working with:

$HCI_Node = "HCI.gbbgov.us"

…and also set the following to point to the same location as the share… but local to the cluster:

$HCI_VM_path = "C:\ClusterStorage\Volume1\shares\VMs"

With those pieces of additional information, I can export a VM from my single Hyper-V host to the HCI cluster, import it, and make it highly available:

Export-VM -Name $VMName -Computername $Server.Server -Path $HCI_VM_Share

$VM_find_location = $HCI_VM_Share + "\" + $VMName + "\*.vmcx"

$File = get-childitem -path $VM_find_location -Recurse

$FileLocation = $HCI_VM_Path + "\" + $VMName + "\" + $File.name

Invoke-Command -ComputerName $HCI_Node -ScriptBlock {

Get-ChildItem -Path $Using:FileLocation -Recurse | Import-VM -Register | Get-VM | Add-ClusterVirtualMachineRole

}
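The script finds the exported .vmcx with a recursive search, since Export-VM places it in a "Virtual Machines" subfolder of the exported VM's folder. If that search ever matched more than one .vmcx, $File.name would hold several names and the import path would be wrong. A hypothetical guard like this one (Get-ExportedVmcx is an illustrative name) fails loudly instead of importing the wrong thing:

```powershell
# Hypothetical helper: return the single .vmcx under an exported VM folder,
# or throw if there are zero or several (so nothing ambiguous gets imported).
function Get-ExportedVmcx {
    param([Parameter(Mandatory)] [string] $ExportFolder)
    # -File skips directories; -Recurse reaches the "Virtual Machines" subfolder.
    $Files = @(Get-ChildItem -Path $ExportFolder -Filter '*.vmcx' -Recurse -File)
    if ($Files.Count -ne 1) {
        throw "Expected one .vmcx under '$ExportFolder', found $($Files.Count)."
    }
    return $Files[0]
}
```

Something like `$File = Get-ExportedVmcx -ExportFolder (Join-Path $HCI_VM_Share $VMName)` could then stand in for the Get-ChildItem line.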

The export is the really time-consuming part of the process… in my environment (over a single GigE port) it seemed to take about 20-30 seconds per GB of VHDX file, so a 40GB VHDX took about 15 minutes – still much faster than moving to Azure over my cable modem!
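To get a rough idea of how long a batch will take before starting, the observed rate can be turned into a quick estimate: 40 GB at 20 to 30 seconds per GB works out to roughly 13 to 20 minutes, which lines up with the 15 minutes I saw. Get-ExportMinutes is a hypothetical helper, and its 25 s/GB default is just the midpoint of my numbers, not a general figure:

```powershell
# Rough export-time estimate from a measured rate (seconds per GB of VHDX).
# The 25 s/GB default is the midpoint of one environment's 20-30 s/GB range.
function Get-ExportMinutes {
    param(
        [Parameter(Mandatory)] [double] $SizeGB,
        [double] $SecondsPerGB = 25
    )
    return $SizeGB * $SecondsPerGB / 60
}

# A 40 GB VHDX at the low and high ends of the observed range:
'{0:N1} to {1:N1} minutes' -f (Get-ExportMinutes 40 20), (Get-ExportMinutes 40 30)
```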

The VM should now be installed on the HCI cluster, but not yet powered up or registered with AVD.

Powering Up / Agent Install / User Re-Assignment

We’ll need to power up the VMs on the HCI cluster (NOT on the RDS Virtualization Host…not ever again there!). Once a VM is online, installing the AVD Agent and Boot Loader with the host pool registration key we saved from Azure earlier should register the VM with AVD.

Start-VM -Name $VMName -Computername $HCI_Node

Start-Sleep -seconds 60 #let VM wake up

I let the process sleep a little to make sure the VM was online and to allow DNS to settle down (if the IP address changed), since the next step relies on being able to copy the agent installs to the VM and run them!
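A fixed 60-second sleep may be too short on a slow boot and wasted time on a fast one. One alternative, sketched here as a hypothetical Wait-VMOnline helper, is to wait for the guest heartbeat with the Hyper-V module's Wait-VM cmdlet and then poll the VM's C$ admin share, which the copy step depends on. It assumes the VM's integration services are running and that your account can reach the admin share:

```powershell
# Hypothetical replacement for the fixed sleep: wait for the guest heartbeat,
# then for the VM's admin share (needed by the copy step) to become reachable.
function Wait-VMOnline {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $VMName,
        [Parameter(Mandatory)] [string] $ComputerName,
        [Parameter(Mandatory)] [string] $DnsHostName,
        [int] $TimeoutSeconds = 300,
        [int] $PollSeconds = 10
    )
    # Wait-VM blocks until the integration-services heartbeat reports in,
    # or until -Timeout seconds have passed.
    Wait-VM -Name $VMName -ComputerName $ComputerName -For Heartbeat -Timeout $TimeoutSeconds

    $AdminShare = "\\$DnsHostName\C$"
    $Deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while (-not (Test-Path -Path $AdminShare)) {
        if ((Get-Date) -ge $Deadline) {
            throw "$AdminShare not reachable after $TimeoutSeconds seconds."
        }
        Start-Sleep -Seconds $PollSeconds
    }
}
```

In the loop, `Wait-VMOnline -VMName $VMName -ComputerName $HCI_Node -DnsHostName $ADComputer.DNSHostName` could take the place of the Start-Sleep line.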

$DestinationPath = -Join("\\", $ADComputer.DNSHostName, "\C$\Installs")

If (!(test-path $DestinationPath)) { md $DestinationPath }

Copy-Item $WorkDIR -Destination $DestinationPath

I did have the above fail once due to timing on the VM (something related to DNS… it’s always DNS!), but re-running it worked. I suspect a timing issue, so a longer wait or some other readiness check may be in order!
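Since the copy failed once and then worked on a re-run, wrapping the step in a retry is one way to absorb that kind of timing hiccup. Invoke-WithRetry is a hypothetical helper, not a built-in cmdlet; cmdlets inside the script block need -ErrorAction Stop so that a failure is actually thrown and retried:

```powershell
# Hypothetical retry wrapper: run $ScriptBlock up to $MaxAttempts times,
# sleeping between failures, and rethrow the last error if every attempt fails.
function Invoke-WithRetry {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [scriptblock] $ScriptBlock,
        [int] $MaxAttempts = 5,
        [int] $DelaySeconds = 15
    )
    for ($Attempt = 1; $Attempt -le $MaxAttempts; $Attempt++) {
        try {
            return & $ScriptBlock
        }
        catch {
            if ($Attempt -eq $MaxAttempts) { throw }
            Write-Verbose "Attempt $Attempt failed: $($_.Exception.Message); retrying in $DelaySeconds s."
            Start-Sleep -Seconds $DelaySeconds
        }
    }
}

# Example (inside the loop):
# Invoke-WithRetry -ScriptBlock {
#     if (!(Test-Path $DestinationPath)) { New-Item -ItemType Directory -Path $DestinationPath -ErrorAction Stop | Out-Null }
#     Copy-Item $WorkDIR -Destination $DestinationPath -ErrorAction Stop
# }
```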

The line below calls a script out on a share to run the agent install:

# forgot to show you these earlier! It's where the install script lives!

$WorkPath = "\\rdsinfra\installs"

$ScriptPath = $WorkPath + "\RemoteAVDInstall.PS1"

Invoke-Command -FilePath $ScriptPath -ComputerName $ADComputer.DNSHostName

Before the Agent installation, there are no session hosts registered with the host pool:

Once the agents have all installed successfully, the VMs should register (using the host pool registration key) and will appear as connected.
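The contents of RemoteAVDInstall.PS1 aren't shown in this post. For reference, Microsoft's documented approach is to install the Remote Desktop Agent MSI with a REGISTRATIONTOKEN property set to the host pool registration key, then install the Agent Boot Loader MSI. The sketch below is hypothetical and is NOT the author's script: Get-AvdAgentMsiArguments is an illustrative helper, and the MSI file names are placeholders (the real installer names differ):

```powershell
# Hypothetical helper: build msiexec arguments for a silent AVD agent install.
# Only the agent MSI takes the registration token; the boot loader does not.
function Get-AvdAgentMsiArguments {
    param(
        [Parameter(Mandatory)] [string] $MsiPath,
        [string] $RegistrationToken
    )
    $ArgList = @('/i', "`"$MsiPath`"", '/quiet', '/norestart')
    if ($RegistrationToken) { $ArgList += "REGISTRATIONTOKEN=$RegistrationToken" }
    # The leading comma keeps the array intact when it is returned.
    return ,$ArgList
}

# Example use on the VM (placeholder paths under the C:\Installs folder copied earlier):
# $Token = '<host pool registration key saved from Azure>'
# Start-Process msiexec.exe -Wait -ArgumentList (Get-AvdAgentMsiArguments 'C:\Installs\RDAgent.msi' $Token)
# Start-Process msiexec.exe -Wait -ArgumentList (Get-AvdAgentMsiArguments 'C:\Installs\RDAgentBootLoader.msi')
```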

After Agent installation we will use the VM user assignment info from $Details to link the user to their old VM:

$User = $item.user -split "\\"

#$User = $item[0].user -split "\\"

$ADUser = get-ADuser -Identity $user[1]

Update-AzWvdSessionHost -HostPoolName $AVDHostPool -ResourceGroupName $AZResourceGroup -SubscriptionId $AZSubscriptionID -Name $ADComputer.DNSHostName -AssignedUser $ADUser.UserPrincipalName
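The $user[1] indexing assumes every assignment is in DOMAIN\user form. A slightly more forgiving variant takes whatever follows the last backslash, so a bare user name also works. Get-SamAccountName is a hypothetical helper name, and GBBGOV\jdoe is only an example value:

```powershell
# Hypothetical helper: turn an RDS assignment such as "GBBGOV\jdoe" into the
# account name "jdoe" for Get-ADUser -Identity; a bare name passes through.
function Get-SamAccountName {
    param([Parameter(Mandatory)] [string] $DomainUser)
    # -split takes a regex, so '\\' matches one literal backslash.
    $Parts = $DomainUser -split '\\'
    return $Parts[-1]
}
```

The lookup would then become `$ADUser = Get-ADUser -Identity (Get-SamAccountName $Item.User)`.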

The VMs and their assigned users should now show as connected to the host pool:

Cleanup

The last remaining step I did (as part of the “big loop”) was to remove the VMs from the legacy RDS deployment:

Remove-RDVirtualDesktopFromCollection -CollectionName $Collection.CollectionName -VirtualDesktopName @($VMName) -ConnectionBroker $RDSBroker -Force

} #that closes "the big loop"

You can certainly do further cleanup, including removing the VMs from Hyper-V and deleting their corresponding VHD/VHDX disk files. At this point the RDS deployment may still have available VMs, but all of those that had been assigned to users should have been migrated (maybe it’s time to shut down that RDS deployment!). It should look something like this (note the lack of VMs with user assignments):

Your HCI cluster should now have a bunch of new VMs, visible in Admin Center:

…the VMs also show up in Hyper-V Manager and Failover Cluster Manager (as expected).
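For the further cleanup mentioned above (removing the legacy VMs from Hyper-V and deleting their disk files), here is a hypothetical sketch that is not part of the author's scripts. It records the VM's disk paths before removing it, deletes those files on the Hyper-V host, and supports -WhatIf so you can see what would be deleted first. It assumes the VM has no checkpoints; with checkpoints, the disk attached to the VM is a differencing .avhdx rather than the base VHDX:

```powershell
# Hypothetical cleanup helper (not from the original scripts). Assumes the VM
# has no checkpoints, so its attached disks are the base VHD/VHDX files.
function Remove-LegacyVM {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [string] $VMName,
        [Parameter(Mandatory)] [string] $ComputerName
    )
    # Record the disk paths first; they can't be looked up once the VM is removed.
    $DiskPaths = @(Get-VMHardDiskDrive -VMName $VMName -ComputerName $ComputerName |
        Select-Object -ExpandProperty Path)

    if ($PSCmdlet.ShouldProcess("$VMName on $ComputerName", 'Remove VM and delete its disk files')) {
        # Remove-VM deletes the VM configuration but leaves the disk files in place.
        Remove-VM -Name $VMName -ComputerName $ComputerName -Force
        if ($DiskPaths.Count -gt 0) {
            # The paths are local to the Hyper-V host, so delete them there.
            Invoke-Command -ComputerName $ComputerName -ScriptBlock {
                param($Paths)
                Remove-Item -Path $Paths -Force
            } -ArgumentList (,$DiskPaths)
        }
    }
    # Return the paths so a -WhatIf run shows which files would be deleted.
    return $DiskPaths
}
```

Running `Remove-LegacyVM -VMName $VMName -ComputerName $Server.Server -WhatIf` first lists the disk paths without changing anything.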

With the VMs moved to Azure Stack HCI and registered with AVD, next we’ll need to get our users to connect through the new control plane.